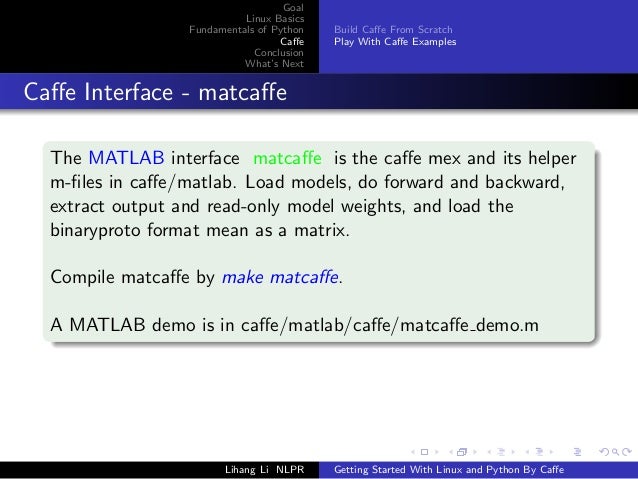

Caffe Matlab

Apr 18, 2016 - If you have Caffe compiled for Matlab (which you can do using make matcaffe), you can start following this simple tutorial. First, make sure Matlab can see the caffe/matlab folder, which would be something like /home/yourusername/caffe/matlab; you can do that from your Matlab script using.

Our publication “”, showed how to use deep learning to address many common digital pathology tasks. Since then, many improvements have been made both in the field and in my implementation of them. In this blog post, I re-address the nuclei segmentation use case using the latest and greatest approaches.

Previous Approach

Please refer to the which provides a tutorial on how the nuclei segmentation was performed in the previous version. As shown in the figure above, in that approach Matlab only extracted the patches and determined which fold of the evaluation scheme each patch belonged to.

Subsequently, the tools provided by Caffe were used to (a) put the patches into a database and then (b) compute the mean of the entire database. Overall, this essentially requires manipulating each patch three separate times: the first time to generate it, the second time to place it in the DB, and the third time to add its contribution to the overall mean. Clearly this wasn’t optimal, but at the time it was the most straightforward way, as it limited the dependencies and leveraged tools which were already written. This approach also had another implicit constraint. We were using a ramdisk to hold the patches before converting them to a database, because pulling them from disk was incredibly slow, so either we were (a) limited to the size of the machine’s RAM or (b) had to accept the time penalty of using the disk.

Revised Approach

In the revised approach shown above, we now use Matlab to extract the patches, immediately place them into the database, and compute the mean in line with the patch generation process. Thus, in this implementation, we never need to pull a patch from disk: it’s created in memory, used for all purposes, and then written to disk.

In this way, each patch is also manipulated only a single time. This is clearly a more optimized approach, but it required additional code and dependencies. In this case, we use not only, which is provided by Caffe, making it straightforward, but also the caffe-extention branch of the project, which provides a Linux-only Matlab wrapper for LMDB.
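The single-pass idea in the revised approach (generate a patch, store it, and fold it into the mean while it is still in memory) can be sketched in a few lines. This is a hedged Python illustration, not the post's Matlab code. A plain dict stands in for an LMDB write transaction, and patches are assumed to be uncompressed uint8 buffers of equal length. The function name and the "label byte + raw pixels" record layout are hypothetical; real Caffe datums are serialized protobufs.

```python
from typing import Dict, Iterable, List, Tuple


def write_patches_with_mean(
    patches: Iterable[Tuple[bytes, int]], db: Dict[bytes, bytes]
) -> List[float]:
    """Store each (patch, label) pair in `db` and return the per-pixel mean.

    Hypothetical stand-in for the Matlab/LMDB pipeline: `db` plays the role of
    an LMDB write transaction and each value is label byte + raw pixel bytes.
    """
    pixel_sums: List[int] = []
    count = 0
    for patch, label in patches:
        if count == 0:
            pixel_sums = [0] * len(patch)
        elif len(patch) != len(pixel_sums):
            raise ValueError("all patches must have the same size")
        # Zero-padded keys make byte-wise key order (as in LMDB) match insertion order.
        key = f"{count:08d}".encode()
        db[key] = bytes([label]) + patch
        # Fold this patch into the running sum while it is still in memory.
        for i, value in enumerate(patch):
            pixel_sums[i] += value
        count += 1
    if count == 0:
        raise ValueError("no patches supplied")
    return [total / count for total in pixel_sums]
```

Because the sum is accumulated as each patch is written, no patch has to be read back from disk just to compute the mean.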

Besides this, the remainder of the approach (i.e., how to select patches, etc.) remains the same. Additionally, we can now create databases of effectively unlimited size (bounded only by disk space), since we’re writing them directly to disk (which is also greatly sped up by the transactions and caching in the LMDB layer). Caffe’s tool is a one-shot deal, in the sense that it doesn’t allow adding to the database after it’s created. In particular, the C++ line db->Open(argv[3], db::NEW); will throw an error if the database already exists. This was acceptable before, since we extracted all the patches ahead of time and “had no more to give”. Now, however, adding patches later on is a reality, since in Matlab we can choose to append to the database instead of overwriting it. It’s not a huge deal (one could recompile the code to allow modification), but it’s a nice benefit of this approach. This approach also has some additional code which generates “layout” files, which show where on the original image the patches were extracted from.

They look something like this, where red and green pixels represent the locations of the extracted positive and negative patches, respectively.

In general, since we’re extracting sub-samples from a mask, it’s nice to get a quick high-level overview of what is being studied. It also helps, when working with students, to be able to review the final input to the DL classifier without having to visually examine the patches themselves.
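A layout file of the kind described above is easy to produce once the patch locations are known. The sketch below is an illustration under assumptions rather than the post's code. Locations are hypothetical (row, col) patch centres in original-image coordinates. The result is a nested list of RGB tuples that any image library could save. A location listed as both positive and negative ends up green, because negatives are drawn last.

```python
from typing import Iterable, List, Tuple

RGB = Tuple[int, int, int]
RED: RGB = (255, 0, 0)      # positive patch locations
GREEN: RGB = (0, 255, 0)    # negative patch locations
BLACK: RGB = (0, 0, 0)      # everything else


def make_layout(
    height: int,
    width: int,
    positives: Iterable[Tuple[int, int]],
    negatives: Iterable[Tuple[int, int]],
) -> List[List[RGB]]:
    """Return a height x width RGB grid marking where patches were taken."""
    layout = [[BLACK] * width for _ in range(height)]
    for locations, color in ((positives, RED), (negatives, GREEN)):
        for r, c in locations:
            # Silently skip locations that fall outside the image.
            if 0 <= r < height and 0 <= c < width:
                layout[r][c] = color
    return layout
```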

The code is fairly well commented; in particular, one should review, which is where the actual DB transactions occur.

Modified Network

The network we use here is a bit larger (it accepts patches of 64 x 64) and contains a few interesting features:

1) The addition of batch normalization layers before the activation layers; for more information see.

2) Removal of pooling units. We use additional convolutional layers to gently reduce the size of the input at each layer. Conceptually, I like this, as it means we have the chance to learn an optimal “pooling” function to some extent. Ultimately, though, I’ve tried these types of networks (with and without pooling) and have seen very little difference in the overall output.

3) Removal of fully connected layers, which are replaced with convolutional layers. This means we can now use the network directly (after slightly modifying the deploy file to adjust for the input image size) in the approach, without having to do any network surgery. Notice, as well, that there is still no padding on any layer. In fact, each layer size was chosen so that the output size of the layer is an integer (padding is usually used to provide this “correction”).

4) We use a style approach in selecting the number of kernels: as the network gets deeper and the input size shrinks, we increase the number of kernels so that each layer takes roughly the same amount of time to compute. This gives us a better ability to predict how long training and testing will take.
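Points 3) and 4) can be checked with a little arithmetic. The sketch below walks a padding-free convolution stack, rejects any layer whose output size would not be an integer, and reports the multiply-accumulate (MAC) count per layer. The layer list in the usage lines is hypothetical, not the post's prototxt. It simply uses three stride-2 layers, giving an overall 8:1 reduction. The MAC count scales with H × W × C_in × C_out, so halving the spatial size while doubling the kernel count leaves it unchanged.

```python
from typing import List, Tuple

Layer = Tuple[int, int, int]  # (kernel_size, stride, num_kernels)


def conv_output_size(size: int, kernel: int, stride: int) -> int:
    """Output width of an unpadded convolution; reject non-integer fits."""
    if size < kernel or (size - kernel) % stride != 0:
        raise ValueError(f"size {size}, kernel {kernel}, stride {stride} does not fit exactly")
    return (size - kernel) // stride + 1


def walk(size: int, channels: int, layers: List[Layer]) -> List[Tuple[int, int, int]]:
    """Return (output_size, num_kernels, multiply_accumulates) for each layer."""
    report = []
    for kernel, stride, kernels in layers:
        out = conv_output_size(size, kernel, stride)
        macs = out * out * kernel * kernel * channels * kernels
        report.append((out, kernels, macs))
        size, channels = out, kernels
    return report


if __name__ == "__main__":
    # Hypothetical 64 x 64 RGB stack ending in a 1 x 1, 2-class output.
    stack = [(5, 1, 8), (2, 2, 8), (3, 1, 16), (2, 2, 16), (3, 1, 32), (2, 2, 32), (6, 1, 2)]
    for out, kernels, macs in walk(64, 3, stack):
        print(out, kernels, macs)  # output sizes: 60, 30, 28, 14, 12, 6, 1
```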

This network sports 100k free parameters, which appears to be overkill for this task. When we removed the “step” increases in kernels and used a fixed 8 kernels at each layer (as opposed to 8, 16, and then 32 in the final layers), we saw little difference in overall performance, even though that parameter space was a much smaller 10k. You can find the updated prototxt and the visualization below.

Output

Note that the input patches are 64 x 64, which implies a larger receptive field that we need to account for when doing the interpolation. For example, if we take the output without any image rescaling, interpolation, or effort to manage the large receptive field, we obtain a 251 x 251 image. That image is “accurate”, but still at an 8:1 pixel ratio, so the boundaries are clunky.

On the other hand, if we do basic interpolation, wherein we simply rescale the (smaller) output image up to the original image size, we see this type of result. In particular, it is a bit grainy and “boxy”, as a result of interpolating values between the pixels which were actually computed.

By computing more versions across the receptive field, ideally one for each pixel (in this case, the “displacefactor=8” option in the ), we obtain a much cleaner output, in line with what we’d expect.

For reference, this is the original input (compressed for easier web loading).

Of course, this comes with the caveat of requiring additional computation: 98 seconds versus 1.5 seconds for the single-pass approach. Unsurprisingly, we could have estimated this. Each image in the field takes 1.5 seconds, and now we have 8 × 8 = 64 sub-images, for a total of 1.5 seconds/image × 64 images = 96 seconds. The additional time is likely due to the need to interpolate the multiple images together.

Future Advancements

One remaining limitation is that the code which writes to the DB doesn’t currently support writing encoded patches. An encoded patch in this case is one stored in a compressed image format such as JPG, PNG, etc. This means that the Caffe datums being stored contain uncompressed image patches, resulting in a larger than necessary database size.
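To get a feel for the size side of this trade-off, the stdlib-only Python sketch below encodes an uncompressed 8-bit RGB patch as PNG bytes entirely in memory and compares the lengths. It is an illustration, not the matlab-lmdb code path. The function names are hypothetical, and only PNG filter type 0 is used. The synthetic gradient patch in the usage lines will likely compress much better than real tissue, so the ratio says nothing about actual database sizes.

```python
import struct
import zlib


def _chunk(kind: bytes, data: bytes) -> bytes:
    """One PNG chunk: length, type, data, CRC-32 over type + data."""
    crc = zlib.crc32(kind + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + kind + data + struct.pack(">I", crc)


def encode_png_rgb(pixels: bytes, width: int, height: int) -> bytes:
    """Encode an 8-bit RGB patch (row-major, interleaved) as PNG bytes in memory."""
    if len(pixels) != width * height * 3:
        raise ValueError("pixel buffer does not match width * height * 3")
    stride = width * 3
    # Each scanline is prefixed with filter type 0 ("None").
    raw = b"".join(b"\x00" + pixels[y * stride:(y + 1) * stride] for y in range(height))
    # IHDR: width, height, bit depth 8, color type 2 (RGB), default methods.
    header = struct.pack(">IIBBBBB", width, height, 8, 2, 0, 0, 0)
    return (
        b"\x89PNG\r\n\x1a\n"
        + _chunk(b"IHDR", header)
        + _chunk(b"IDAT", zlib.compress(raw))
        + _chunk(b"IEND", b"")
    )


if __name__ == "__main__":
    # Hypothetical 64 x 64 RGB gradient patch (12288 raw bytes).
    patch = bytes((x + y) % 256 for y in range(64) for x in range(64) for _ in range(3))
    png = encode_png_rgb(patch, 64, 64)
    print("raw:", len(patch), "png:", len(png))
```

The decode cost discussed next is the other half of the trade-off: every stored PNG would have to be decompressed again before the patch reaches the GPU.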

While matlab-lmdb does support writing encoded datums, they need to be in a binary format, piped in through a file descriptor. This means writing each patch to disk in the appropriate format (e.g., PNG) and then opening a file descriptor to that file so that the exact byte stream can be loaded. A ramdisk could be used to do this, but I don’t think the additional code complexity is worth it.

Although it would decrease the database size, it would likely also increase the training time, as each image would need to be decoded before being shipped to the GPU. By writing uncompressed images to the DB, we can skip that whole process. After all, I delete the databases after each iteration of algorithm development, so given their ephemeral nature it’s not a good investment of time to shrink them. Components of the code are available. Note: the binary models in the GitHub link above were generated using nvidia-caffe version 0.14 and aren’t compatible with later versions (currently 0.15).
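Returning to the displacefactor option described in the Output section: one common way to turn the factor × factor shifted runs into a dense map is to interleave them, a technique usually called shift-and-stitch. The Python sketch below shows that technique under stated assumptions. It is not necessarily what the post's script does, since the script interpolates between the computed points. `run_net` is a hypothetical callback that returns the coarse output for the input cropped by (dy, dx) pixels, and all outputs are assumed to have the same shape. With factor = 8 it calls the network 8 × 8 = 64 times, which matches the roughly 64-fold increase in runtime.

```python
from typing import Callable, Dict, List, Tuple

Grid = List[List[float]]


def shift_and_stitch(run_net: Callable[[int, int], Grid], factor: int) -> Grid:
    """Interleave factor * factor coarse outputs into one dense map.

    run_net(dy, dx) is assumed (hypothetically) to return the network's coarse
    output for the input with its first dy rows and dx columns cropped away.
    """
    outputs: Dict[Tuple[int, int], Grid] = {
        (dy, dx): run_net(dy, dx) for dy in range(factor) for dx in range(factor)
    }
    rows, cols = len(outputs[0, 0]), len(outputs[0, 0][0])
    dense: Grid = [[0.0] * (cols * factor) for _ in range(rows * factor)]
    for (dy, dx), out in outputs.items():
        for i in range(rows):
            for j in range(cols):
                # Coarse pixel (i, j) of the (dy, dx) run lands at this dense position.
                dense[i * factor + dy][j * factor + dx] = out[i][j]
    return dense
```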

Hello, thank you for sharing your code and great guidance. I am running the last step (step5) using the command step5createoutputimageskfold.py ./BASE/ 2, in which ./BASE is set up as in your guidance. It contains an original image which needs to be segmented.

Hi Andrew, thanks for making all of your code available, and for documenting it all. I’m looking forward to trying it on some of my H&E data. I tried loading the Caffe model from the folder for this blog post (DL Tutorial Code – LMDB) in your git repo in Python but am getting a shape mismatch error: Cannot copy param 2 weights from layer ‘bn1a’; shape mismatch. Source param shape is 1 1 1 1 (1); target param shape is 1 16 1 1 (16). The line I’m executing is: netfullconv = caffe.Net("deployfull.prototxt", "fullconvolutionalnet.caffemodel", caffe.TEST). It’s the line from the Python script in the same folder (makeoutputimagereconstruct-withinterpolation-advancedcmd.py), with the default arguments.

Are those the correct model and prototxt files I should use, or might there be something else wrong?

Hi Andrew, thank you so much for all your help!

I’m nearly able to complete all the steps on Windows and am currently trying to create the image segmentation model in DIGITS. Since the model I got from your GitHub is no longer compatible with the latest version of Caffe, could you suggest a way to make your layer file compatible? Basically, the issue is with the error Bad network: Not a valid NetParameter: 42:5: Message type “caffe.BatchNormParameter” has no field named scalefiller/biasfiller/engine: CUDNN. Thanks a lot!

Thanks Andrew for the reply 😀 I deleted batchnormparameter for now and managed to get through the training. Now I’m at the final step, where I got the error “invalid shape for input data points” at “interp = scipy.interpolate.LinearNDInterpolator( (xxall,yyall), zinterall) ”. I think it might have to do with the dimensions, or maybe with the change I added earlier, “displacefactor = round(args.displace)-1”; if I use the original script without ‘-1’, I get a blob mismatch error.

Do you think this could also be a Python 2/3 issue? Thanks a lot!

The deploy file specifies what size the input will be resized to before it is fed into the network.

So if you pass in a large image with the original deploy file, it will resize it down to a single patch and you will only get a single output. Because the network is built entirely from convolutions, it can in fact take an image of any size and run it through without an issue, producing an image of a slightly smaller size. Try walking through the network mathematically by hand and I think you’ll see it works without a problem. You can also check out FCNs, which are similar to this approach, for additional reading.

Dear Janowczyk, I followed the tutorial to do nuclei segmentation.